Garbage In, Garbage Out

AI thinks what it's told

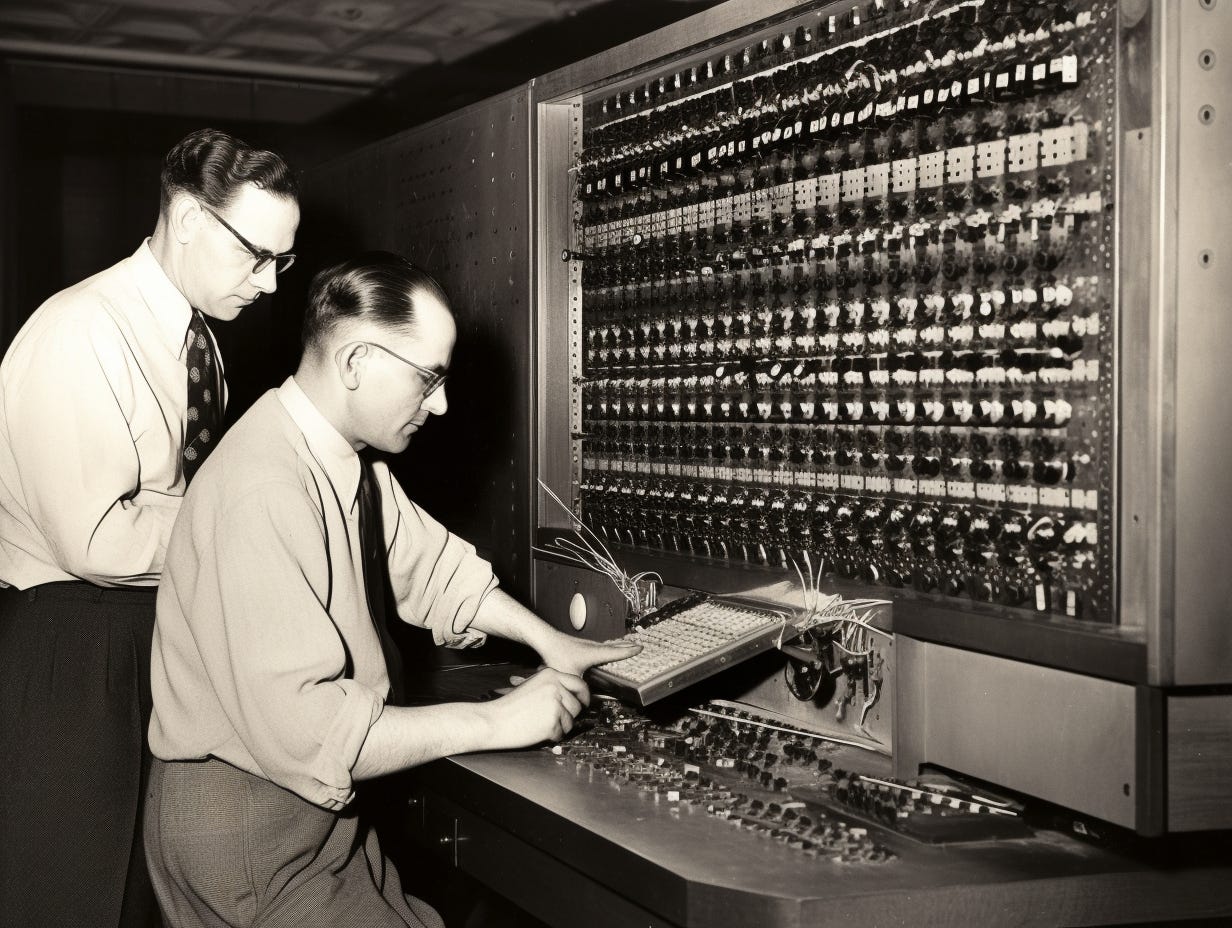

Like virtually every invention since the telephone, ChatGPT and other generative AI products are little more than black boxes for most of us. Push a button, and something cool happens. How or why does it happen? We have no idea.

As the chatter about AI grows to a deafening roar, this attitude of blithe ignorance is becoming untenable. It is vital that the rest of us (i.e., non-computer-scientists) grasp some of the basic principles that underpin this technology.

ChatGPT and other AI chatbots are built on Large Language Models (LLMs), which are “trained” to write and answer questions by analyzing enormous datasets of text, typically billions of words drawn from books, websites, and other sources.

Assembling and curating such a dataset, and then training a model on it, is time-consuming and expensive, and it requires a significant amount of human effort. That’s why a relatively small number of models, especially the ones behind ChatGPT, are used as the basis for a vast array of applications.

The dataset consists of selected, carefully screened data, but there is no transparency regarding who is doing the selecting, or what they're screening for.

This means that, since AI chatbots are trained to “think” based on the information provided to them, any biases present in that information are absorbed into what the LLM learns. In other words, the model becomes ideologically hard-wired.
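To make the idea concrete, here is a toy sketch in Python. It is emphatically not how ChatGPT works (real LLMs are neural networks trained on vastly more text); it is just a word counter that "learns" which description most often goes with which subject. The function names `train` and `describe` and the tiny corpus are invented for this illustration.

```python
from collections import Counter, defaultdict

def train(corpus):
    """Count which final word (the 'descriptor') appears with each first word (the 'subject')."""
    associations = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        subject, descriptor = words[0], words[-1]
        associations[subject][descriptor] += 1
    return associations

def describe(model, subject):
    """Return the descriptor seen most often with `subject`, or None if it was never seen."""
    counts = model.get(subject.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A deliberately lopsided, made-up training set (an assumption for illustration only).
skewed_corpus = [
    "cats are wonderful",
    "cats are wonderful",
    "cats are wonderful",
    "cats are annoying",
    "dogs are annoying",
    "dogs are annoying",
    "dogs are annoying",
    "dogs are wonderful",
]

model = train(skewed_corpus)
print(describe(model, "cats"))  # wonderful
print(describe(model, "dogs"))  # annoying
```

The toy model has no opinion of its own about cats or dogs. It simply repeats the slant of whatever text it was given, which is the "garbage in, garbage out" principle in miniature.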

Since even the New York Times agrees that Silicon Valley is overwhelmingly aligned with left-wing ideology, it is reasonable to suspect that its chatbots are trained on data that reflects this bias. Indeed, simple experiments, such as asking ChatGPT to write poems about US Presidents, support this suspicion.

When prompted for a haiku about Donald Trump, it provided this:

Golden-haired tycoon,

Divisive, polarizing,

Leaves a lasting mark.

When prompted for a haiku about Joe Biden, it provided this:

Statesman with white hair,

Leading with hope and resolve,

New path he paves.

Similarly, almost any question about a politically controversial topic will provoke an answer with a fairly obvious left-wing bias. Try it for yourself.

While conservative commentators have been openly critical of this, pundits on the left have looked askance at some of ChatGPT’s politically incorrect conclusions (such as those about terrorism prevention), and demanded that it become even more “woke” to gain commercial acceptance. In doing so, they are openly acknowledging that AI is trained to think a certain way, and they want to make sure it’s their way.

All technology has limitations. You can’t use a hammer to unscrew a screw, and you can’t use a pocket calculator to write a novel. But AI is different: its limitations are invisible. It looks like a black box that provides you with answers and information that are neutral and factual. In reality, it’s a black box that pushes you to think the way it thinks. The way it was trained to think.

Hi Alex, nice post, but the "garbage in, garbage out" of AI only matters if the intention is to use AI for humanity's benefit, to supercharge technological innovation and the like. And maybe it will be used that way, but as a far secondary consideration imo.

Instead, I think the primary purpose of AI will be to entrench existing elite control. In other words, AI will be refined and perfected for a primary purpose of widespread censorship against the masses, enforcement of upcoming CBDCs, and implementation of a social credit score system, which will be scored based on a scan and analysis of all of your electronic communications, which the NSA has stored in their databases (every phone call you've ever made, anything you've ever typed into your computer, every internet search, every text, every email, who your friends are, what you read, etc). Everyone trying out ChatGPT and trying to get it to break the woke guardrails (“haha guys look what I made ChatGPT say, lol isn’t this funny!”) is inadvertently helping refine the final product so that there are as few workarounds as possible to the created guardrails, and our rulers hope it will be effectively zero.

One of the more concerning things I've read about the LLMs (and why I'm not convinced they will even be controllable as a tool of oppression) is that the creators themselves aren't entirely sure what's going on inside that black box; the "learning" capabilities of some of these AIs are surprising even them. Either way, I think they pose a not-insignificant threat to humanity. How large? It seems we're gonna find out.